Avoid the Top 10 Mistakes Made When Starting DeepSeek

Author: Senaida | Posted: 25-01-31 09:33

3; and meanwhile, it's the Chinese models that historically regress the most from their benchmarks when applied (and DeepSeek models, while not as bad as the rest, still do this, and r1 is already looking shakier as people try out held-out problems or benchmarks). I will keep tweaking all of these settings to get the best output, and I'm also going to keep testing new models as they become available. Get started by installing with pip.

The DeepSeek-VL series (including Base and Chat) supports commercial use. We release the DeepSeek-VL family, including the 1.3B-base, 1.3B-chat, 7b-base and 7b-chat models, to the public. The DeepSeek-V2 series includes four models: two base models (DeepSeek-V2 and DeepSeek-V2-Lite) and two chatbots (the -Chat variants). However, the knowledge these models have is static: it does not change even as the actual code libraries and APIs they rely on are constantly updated with new features and changes.

A promising direction is the use of large language models (LLMs), which have been shown to have good reasoning capabilities when trained on large corpora of text and math. But when the space of possible proofs is very large, the models are still slow.

This could have important implications for applications that require searching over a vast space of possible solutions and that have tools to verify the validity of model responses. CityMood provides local authorities and municipalities with the latest digital research and critical tools to give a clear picture of their residents' needs and priorities. The research shows the power of bootstrapping models with synthetic data and having them create their own training data. AI labs such as OpenAI and Meta AI have also used Lean in their research.

This guide assumes you have a supported NVIDIA GPU and have installed Ubuntu 22.04 on the machine that will host the Ollama Docker image. Follow the instructions to install Docker on Ubuntu. Note again that x.x.x.x is the IP address of the machine hosting the Ollama Docker container. By hosting the model on your own machine, you gain greater control over customization, letting you tailor its functionality to your specific needs.
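Once the Ollama container is running, other machines can talk to it over HTTP. The minimal sketch below uses only the Python standard library and assumes Ollama's default port 11434, its `/api/generate` endpoint with streaming turned off, and a model tag such as `deepseek-r1` that you have already pulled; the helper function names are hypothetical, not part of any Ollama client library.

```python
import json
import urllib.request

OLLAMA_PORT = 11434  # Ollama's default API port (assumed unchanged in your container setup)


def build_generate_request(host: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    url = f"http://{host}:{OLLAMA_PORT}/api/generate"
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(raw: bytes) -> str:
    """With stream=False, Ollama replies with a single JSON object; the text is in 'response'."""
    return json.loads(raw)["response"]


def generate(host: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send one prompt to the remote Ollama server and return the full reply text."""
    req = build_generate_request(host, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

For example, `generate("x.x.x.x", "deepseek-r1", "Why is the sky blue?")` would return the model's whole answer as one string once you substitute your host's IP. Turning streaming off trades incremental output for a simpler client: one request, one JSON object to parse.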

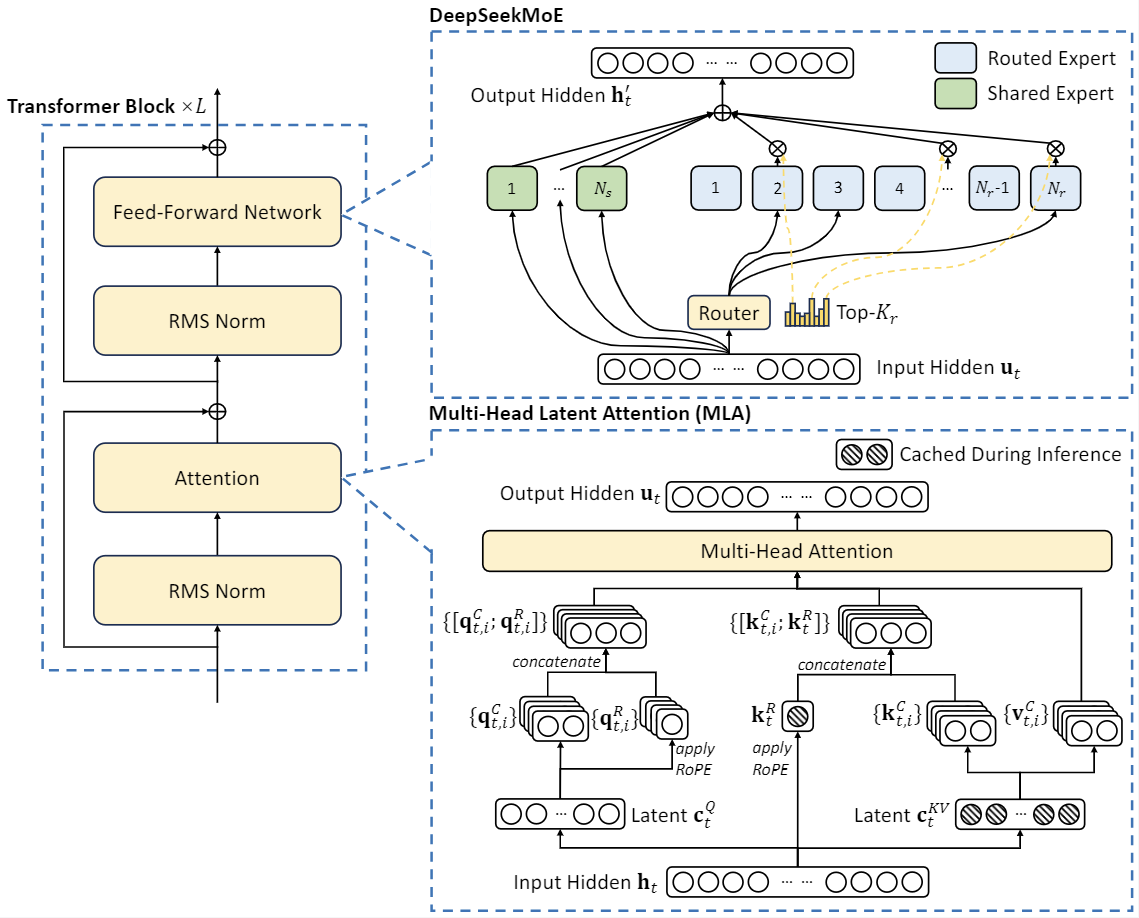

Use of the DeepSeek-VL Base/Chat models is subject to the DeepSeek Model License. However, to solve complex proofs, these models must be fine-tuned on curated datasets of formal proof languages. One thing to consider when building quality training material to teach people Chapel is that, at the moment, the best code generator for different programming languages is DeepSeek Coder 2.1, which is freely available for people to use. American Silicon Valley venture capitalist Marc Andreessen likewise described R1 as "AI's Sputnik moment".

SGLang currently supports MLA optimizations, FP8 (W8A8), FP8 KV cache, and Torch Compile, offering the best latency and throughput among open-source frameworks. Compared with DeepSeek 67B, DeepSeek-V2 achieves stronger performance while saving 42.5% of training costs, reducing the KV cache by 93.3%, and boosting maximum generation throughput to 5.76 times. The original model is 4-6 times more expensive, and it is also 4 times slower.

I'm having more trouble seeing how to read what Chalmer says in the way your second paragraph suggests; e.g., 'unmoored from the original system' doesn't seem to be talking about the same system producing an ad hoc explanation.
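To put DeepSeek-V2's quoted 93.3% KV-cache reduction in concrete terms, the sketch below computes the standard per-token KV-cache size for multi-head attention (2 * layers * KV heads * head dimension * bytes per element) and applies the reduction to it. The model dimensions are made-up illustrative values, not the actual configuration of DeepSeek 67B or DeepSeek-V2, and the code does not model how MLA achieves the saving.

```python
# Hypothetical dimensions, for illustration only; NOT DeepSeek 67B's or DeepSeek-V2's real config.
N_LAYERS = 60
N_KV_HEADS = 64
HEAD_DIM = 128
BYTES_PER_ELEM = 2  # fp16 / bf16


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_elem: int) -> int:
    """Plain multi-head attention caches one key and one value vector
    per KV head, per layer, for every token in the context."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


def apply_reduction(size: float, reduction: float) -> float:
    """Size left after cutting `size` by the fraction `reduction` (0.933 means 93.3%)."""
    return size * (1.0 - reduction)


baseline = kv_cache_bytes_per_token(N_LAYERS, N_KV_HEADS, HEAD_DIM, BYTES_PER_ELEM)
reduced = apply_reduction(baseline, 0.933)  # the 93.3% figure quoted for DeepSeek-V2
```

With these hypothetical numbers the baseline is 1,966,080 bytes (1,920 KiB) per token, so a 93.3% cut leaves about 6.7% of that, roughly 129 KiB per token. Because the cache grows linearly with context length, the same ratio holds for any number of cached tokens.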

This approach helps quickly discard the original statement when it is invalid by proving its negation. Automated theorem proving (ATP) is a subfield of mathematical logic and computer science that focuses on developing computer programs to automatically prove or disprove mathematical statements (theorems) within a formal system. DeepSeek-Prover, the model trained with this method, achieves state-of-the-art performance on theorem-proving benchmarks. The benchmarks largely say yes.

People like Dario, whose bread and butter is model performance, invariably over-index on model performance, especially on benchmarks. Your first paragraph makes sense as an interpretation, which I discounted because the idea of something like AlphaGo doing CoT (or applying CoT to it) seems so nonsensical, since it is not at all a linguistic model.

Voilà, you have your first AI agent. Now, build your first RAG pipeline with Haystack components. What's stopping people right now is that there aren't enough people to build that pipeline fast enough to take advantage of even the current capabilities.

I'm happy for people to use foundation models the same way they do today, as they work on the big problem of how to make future, more powerful AIs that run on something closer to ambitious value learning or CEV rather than corrigibility/obedience.
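The discard-by-negation idea from the theorem-proving passage can be made concrete in Lean, the formal system mentioned earlier. This is a minimal sketch assuming Lean 4 with only its core library (no Mathlib); the theorem names are made up for illustration, and it shows the shape of the strategy, not DeepSeek-Prover's actual pipeline.

```lean
-- A true statement: proved directly from the core lemma `Nat.zero_add`.
theorem zero_add_example (n : Nat) : 0 + n = n :=
  Nat.zero_add n

-- A false universal claim ("every natural number equals its successor").
-- Rather than searching for a proof that cannot exist, we prove its negation,
-- which lets a prover discard the original statement.
theorem not_all_succ_eq_self : ¬ (∀ n : Nat, n + 1 = n) := by
  intro h
  -- Specializing the hypothesis at n = 0 gives `0 + 1 = 0`,
  -- which `decide` can refute by direct computation.
  exact absurd (h 0) (by decide)
```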
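The retrieve-then-generate idea behind a RAG pipeline can be shown without any framework. This is a minimal, library-free sketch and not Haystack's API: a crude term-overlap score stands in for a real retriever such as BM25, and all function names are hypothetical. The prompt it builds would then be sent to whichever model you host.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Count occurrences of the query's distinct terms in the document (a crude BM25 stand-in)."""
    doc_counts = Counter(tokenize(doc))
    return sum(doc_counts[term] for term in set(tokenize(query)))


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents with a positive score, best first."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:top_k] if score(query, d) > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model's answer in the retrieved passages."""
    passages = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{passages}\n"
        f"Question: {query}\nAnswer:"
    )
```

Keeping retrieval and prompt construction as separate steps mirrors how pipeline frameworks wire components together, so either piece can be swapped (for example, a vector retriever in place of term overlap) without touching the other.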