Deepseek - What To Do When Rejected

Author: Celesta · Posted: 25-02-01 20:44

The paper introduces DeepSeekMath 7B, a large language model that has been pre-trained on a massive amount of math-related data from Common Crawl, totaling 120 billion tokens. This data will probably be fed back to the U.S. Let’s check back in a while, when models are scoring 80% plus, and ask ourselves how general we think they are. Models converge to the same levels of performance, judging by their evals. Sometimes they would change their answers if we switched the language of the prompt - and sometimes they gave us polar opposite answers if we repeated the prompt in a new chat window in the same language. First, we tried some models using Jan AI, which has a nice UI. This is a situation OpenAI explicitly wants to avoid - it’s better for them to iterate quickly on new models like o3. It’s like, okay, you’re already ahead because you have more GPUs.


While we have seen attempts to introduce new architectures such as Mamba and, more recently, xLSTM, to name just a few, it seems likely that the decoder-only transformer is here to stay - at least for the most part. With a finger on the pulse of AI research and innovation, we bring a fresh perspective to this dynamic field, allowing readers to stay up to date on the latest developments. The research has the potential to inspire future work and contribute to the development of more capable and accessible mathematical AI systems. Overall, the CodeUpdateArena benchmark represents an important contribution to the ongoing efforts to improve the code generation capabilities of large language models and make them more robust to the evolving nature of software development. To solve some real-world problems today, we need to tune specialized small models. The paper presents extensive experimental results, demonstrating the effectiveness of DeepSeek-Prover-V1.5 on a range of challenging mathematical problems. Addressing these areas could further enhance the effectiveness and versatility of DeepSeek-Prover-V1.5, ultimately leading to even greater advancements in the field of automated theorem proving.

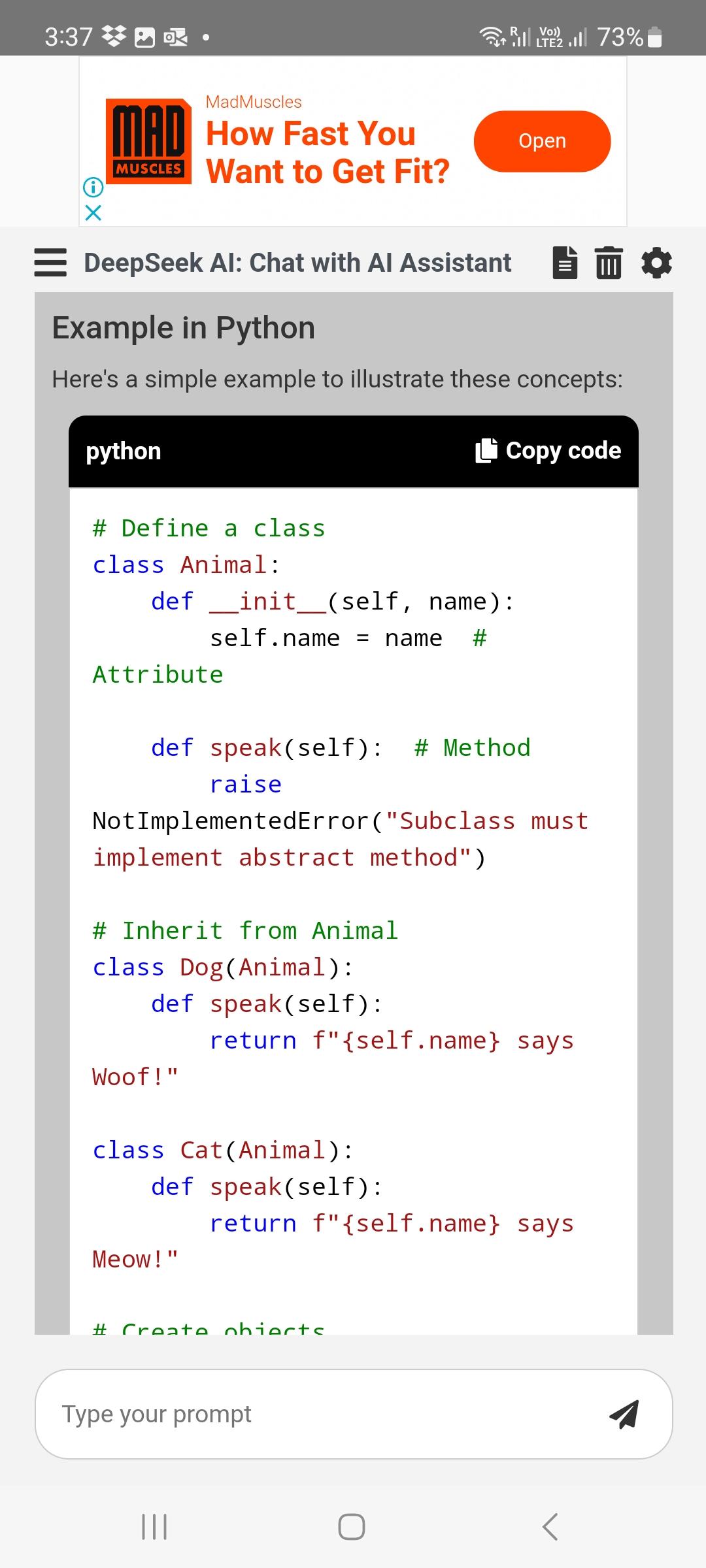

We see little improvement in effectiveness (evals). There is another evident trend: the cost of LLMs is going down while the speed of generation is going up, with performance maintained or slightly improved across different evals. Benchmark tests put V3’s performance on par with GPT-4o and Claude 3.5 Sonnet. Closed SOTA LLMs (GPT-4o, Gemini 1.5, Claude 3.5) had marginal improvements over their predecessors, sometimes even falling behind (e.g. GPT-4o hallucinating more than previous versions). OpenAI introduced GPT-4o, Anthropic brought out their well-received Claude 3.5 Sonnet, and Google's newer Gemini 1.5 boasted a 1 million token context window. The AI Credit Score (AIS) was first introduced in 2026 after a series of incidents in which AI systems were found to have compounded certain crimes, acts of civil disobedience, and terrorist attacks and attempts thereof. We've impounded your system for further study. By simulating many random "play-outs" of the proof process and analyzing the results, the system can identify promising branches of the search tree and focus its efforts on those areas. This code creates a basic Trie data structure and provides methods to insert words, search for words, and check if a prefix is present in the Trie. Each expert model was trained to generate only synthetic reasoning data in one specific domain (math, programming, logic).
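The Trie code mentioned above is not reproduced in this post, so here is a minimal Python sketch of what such a structure might look like. The class and method names (`TrieNode`, `Trie`, `insert`, `search`, `starts_with`) are assumptions chosen for illustration, not the original code.

```python
class TrieNode:
    """One node of the trie (hypothetical name, not from the original post)."""

    def __init__(self):
        self.children = {}    # maps a character to the child TrieNode
        self.is_word = False  # True if a stored word ends at this node


class Trie:
    """Basic trie supporting insert, exact-word search, and prefix check."""

    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        # Walk down the tree, creating nodes for characters not yet present.
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def _walk(self, text):
        # Return the node reached by following text, or None if the path breaks.
        node = self.root
        for ch in text:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def search(self, word):
        # A word is present only if its path exists and ends on a word marker.
        node = self._walk(word)
        return node is not None and node.is_word

    def starts_with(self, prefix):
        # A prefix is present if its path exists, whether or not a word ends there.
        return self._walk(prefix) is not None
```

Insertion and both lookups take time proportional to the length of the string, regardless of how many words are stored. A dictionary per node is a simple choice here; a fixed-size array per node would be an alternative for a small, known alphabet.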
