TheBloke/deepseek-coder-33B-instruct-GPTQ · Hugging Face

페이지 정보

작성자 Mirta 작성일25-02-07 03:06 조회33회 댓글0건관련링크

본문

We pre-educated DeepSeek language models on an unlimited dataset of two trillion tokens, with a sequence size of 4096 and AdamW optimizer. HaiScale Distributed Data Parallel (DDP): Parallel training library that implements various forms of parallelism equivalent to Data Parallelism (DP), Pipeline Parallelism (PP), Tensor Parallelism (TP), Experts Parallelism (EP), Fully Sharded Data Parallel (FSDP) and Zero Redundancy Optimizer (ZeRO). 3FS (Fire-Flyer File System): A distributed parallel file system, specifically designed for asynchronous random reads. Yeah, that’d be - no, all issues being equal, Kevin, it’s really far more comfy to document right here in my house studio and not have to compete with the PA system asserting flights to Houston. The researchers have developed a new AI system known as DeepSeek-Coder-V2 that goals to beat the constraints of present closed-source fashions in the field of code intelligence. And it spun out of a hedge fund called High-Flyer. Jailbreaks started out simple, with people essentially crafting intelligent sentences to inform an LLM to disregard content filters-the most well-liked of which was known as "Do Anything Now" or DAN for short. Once I began using Vite, I by no means used create-react-app ever again. To supply the final DeepSeek-R1 mannequin based mostly on DeepSeek-R1-Zero, they did use some conventional techniques too, including using SFT for high-quality-tuning to focus on specific downside-solving domains.

We pre-educated DeepSeek language models on an unlimited dataset of two trillion tokens, with a sequence size of 4096 and AdamW optimizer. HaiScale Distributed Data Parallel (DDP): Parallel training library that implements various forms of parallelism equivalent to Data Parallelism (DP), Pipeline Parallelism (PP), Tensor Parallelism (TP), Experts Parallelism (EP), Fully Sharded Data Parallel (FSDP) and Zero Redundancy Optimizer (ZeRO). 3FS (Fire-Flyer File System): A distributed parallel file system, specifically designed for asynchronous random reads. Yeah, that’d be - no, all issues being equal, Kevin, it’s really far more comfy to document right here in my house studio and not have to compete with the PA system asserting flights to Houston. The researchers have developed a new AI system known as DeepSeek-Coder-V2 that goals to beat the constraints of present closed-source fashions in the field of code intelligence. And it spun out of a hedge fund called High-Flyer. Jailbreaks started out simple, with people essentially crafting intelligent sentences to inform an LLM to disregard content filters-the most well-liked of which was known as "Do Anything Now" or DAN for short. Once I began using Vite, I by no means used create-react-app ever again. To supply the final DeepSeek-R1 mannequin based mostly on DeepSeek-R1-Zero, they did use some conventional techniques too, including using SFT for high-quality-tuning to focus on specific downside-solving domains.

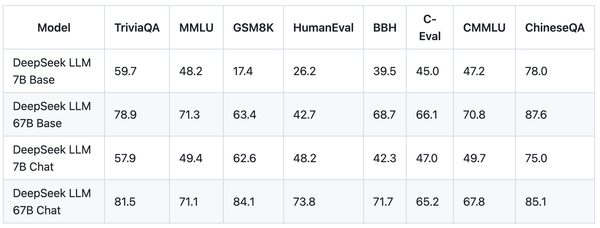

DeepSeek-V2 adopts progressive architectures including Multi-head Latent Attention (MLA) and DeepSeekMoE. Evaluation outcomes show that, even with only 21B activated parameters, DeepSeek-V2 and its chat variations nonetheless achieve high-tier performance amongst open-supply fashions. Researchers will be utilizing this info to investigate how the model's already spectacular downside-solving capabilities may be even additional enhanced - improvements which might be prone to end up in the next technology of AI fashions. Giving it concrete examples, that it may observe. Giving LLMs extra room to be "creative" in relation to writing checks comes with multiple pitfalls when executing tests. LLMs weren't "hitting a wall" at the time or (much less hysterically) leveling off, however catching up to what was known potential wasn't an endeavor that is as exhausting as doing it the primary time. Medical staff (also generated by way of LLMs) work at different components of the hospital taking on completely different roles (e.g, radiology, dermatology, internal medication, and many others). While genAI fashions for HDL nonetheless endure from many issues, SVH’s validation features considerably reduce the risks of using such generated code, guaranteeing greater quality and reliability. Monte-Carlo Tree Search, alternatively, is a way of exploring attainable sequences of actions (on this case, logical steps) by simulating many random "play-outs" and utilizing the outcomes to guide the search in the direction of more promising paths.

DeepSeek-V2 adopts progressive architectures including Multi-head Latent Attention (MLA) and DeepSeekMoE. Evaluation outcomes show that, even with only 21B activated parameters, DeepSeek-V2 and its chat variations nonetheless achieve high-tier performance amongst open-supply fashions. Researchers will be utilizing this info to investigate how the model's already spectacular downside-solving capabilities may be even additional enhanced - improvements which might be prone to end up in the next technology of AI fashions. Giving it concrete examples, that it may observe. Giving LLMs extra room to be "creative" in relation to writing checks comes with multiple pitfalls when executing tests. LLMs weren't "hitting a wall" at the time or (much less hysterically) leveling off, however catching up to what was known potential wasn't an endeavor that is as exhausting as doing it the primary time. Medical staff (also generated by way of LLMs) work at different components of the hospital taking on completely different roles (e.g, radiology, dermatology, internal medication, and many others). While genAI fashions for HDL nonetheless endure from many issues, SVH’s validation features considerably reduce the risks of using such generated code, guaranteeing greater quality and reliability. Monte-Carlo Tree Search, alternatively, is a way of exploring attainable sequences of actions (on this case, logical steps) by simulating many random "play-outs" and utilizing the outcomes to guide the search in the direction of more promising paths.

This transcript was created using speech recognition software. I do not assume you'd have Liang Wenfeng's sort of quotes that the aim is AGI, and they are hiring people who find themselves excited by doing hard issues above the money-that was far more a part of the culture of Silicon Valley, where the money is kind of anticipated to return from doing laborious things, so it does not have to be acknowledged both. There's much more regulatory clarity, however it's truly fascinating that the tradition has also shifted since then. Several states have already passed laws to regulate or restrict AI deepfakes in a method or another, and more are seemingly to take action quickly. It includes 236B total parameters, of which 21B are activated for each token, and helps a context length of 128K tokens. 0.07/million tokens with caching), and output will cost $1.10/million tokens. There isn't any price (past time spent), and there isn't a lengthy-time period dedication to the undertaking. 500 billion Stargate Project announced by President Donald Trump. AI search is among the coolest uses of an AI chatbot we have seen so far.

The net service uses streaming output, i.e., شات DeepSeek every time the model outputs a token, it is going to be displayed incrementally on the net page. However, the downloadable mannequin still exhibits some censorship, and different Chinese fashions like Qwen already exhibit stronger systematic censorship built into the model. One of the things that our dialog returned to, repeatedly, is that people are nonetheless making an attempt to know the ramifications of latest open supply fashions like DeepSeek R1. Why are empty strains repeatedly returned when calling the API? If you're parsing the HTTP response your self, please be sure to handle these empty lines or feedback appropriately. To forestall the TCP connection from being interrupted because of timeout, we continuously return empty traces (for non-streaming requests) or SSE keep-alive comments ( : keep-alive,for streaming requests) whereas ready for the request to be scheduled. MLA ensures efficient inference by means of significantly compressing the key-Value (KV) cache into a latent vector, while DeepSeekMoE permits coaching strong fashions at an economical price by sparse computation. While it has been reviewed by human transcribers, it could contain errors. You may remember, if you’ve listened to our episode a few weeks ago on this, that DeepSeek is a Chinese AI company.

If you have virtually any questions concerning where by and tips on how to use ديب سيك, you can call us on our internet site.

댓글목록

등록된 댓글이 없습니다.